I’ve seen a few posts that come close to what I am trying to accomplish, but none quite specific to my situation.

I currently have my database on Pinecone, with OpenAI API calls and queries working as expected for single-input prompts/questions. However, the conversational workflows aren’t working properly: I would like to have a continuous conversation with Pinecone, including follow-up questions.

I have a separate workflow for messages added to an existing conversation, where the messages and roles are combined into a single string and sent alongside the prompt to Pinecone. However, it is spotty. Sometimes it recognizes the previous message topic if my prompt is specific enough, but if I ask something broader it “resets” and gives a broad answer again. For example:

user: what things are red?

assistant: things A and B are red

user: can you tell me more about thing B?

assistant: thing B is red, and is currently listed for sale.

user: how much?

assistant: thing A is listed for $1. thing B is listed for $2. thing C is listed for $4. thing D is listed for $2.

I am curious if anyone has found a solution specific to OpenAI/Pinecone that allows follow-up questions to RAG responses. Essentially, I’ve built the workflows on OpenAI to “create a new thing” from data I pull, but I need to be able to tweak it before it creates the thing. Currently, it uses RAG to match three similar data items, then aggregates the data into Tools to create my new thing. But I need to be able to say “I like similar items A and B, but can we omit item C from the new thing”, because as it stands my users are forced to accept Pinecone’s assumptions as it aggregates data for the new thing.

Thanks in advance!

I don’t know if you’ll find the best solutions for Pinecone problems here… I hope you do though.

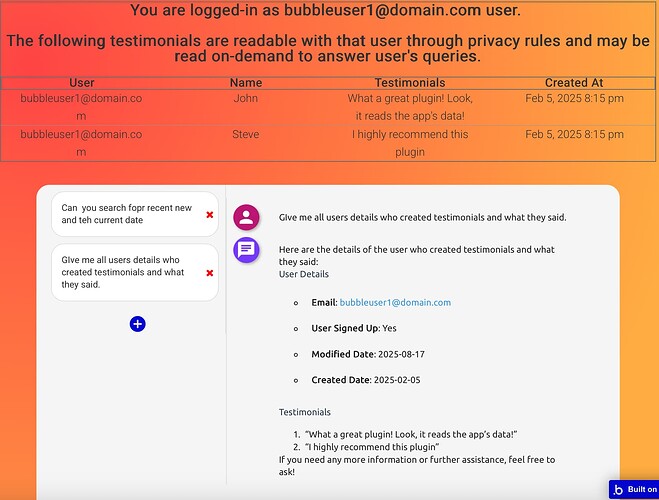

You might want to simplify your implementation. Unfortunately, I cannot offer you more than my commercial plug-in, OpenAI - GPT Streaming with RAG Plugin | Bubble, which is surely able to handle requests like “I like similar items A and B, but can we omit item C from the new thing”.

Basic example below, to be tested against your use-case.

Thanks, but I ended up getting it to work natively. Stripping the conversation down into “user: message | assistant: message” and sending it with the query package did the trick, though it took some playing with the prompt. I also rebuilt the page’s workflows to essentially store multiple “characters of AI” based on conversational context. This separates all the prompts and sets actions inside the JSON that, when parsed, switch out the “active character”.
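For anyone who wants to try the same flattening outside of Bubble, here is a minimal Python sketch. The function names, the `max_turns` cap, and the wording of the combined query are assumptions for illustration, not what was actually built here.

```python
from typing import Dict, List


def flatten_history(messages: List[Dict[str, str]], max_turns: int = 6) -> str:
    """Collapse the most recent turns into 'role: message | role: message'."""
    # max_turns is a hypothetical cap so the string does not grow forever.
    recent = messages[-max_turns:] if max_turns > 0 else []
    parts = []
    for m in recent:
        # Collapse newlines and repeated whitespace so each turn stays on one line.
        text = " ".join(m["content"].split())
        parts.append(f"{m['role']}: {text}")
    return " | ".join(parts)


def build_query(messages: List[Dict[str, str]], question: str) -> str:
    """Prefix the new question with the flattened history, if there is any."""
    history = flatten_history(messages)
    if not history:
        return question
    return f"Conversation so far: {history}\nCurrent question: {question}"
```

The string returned by `build_query` is what would be embedded and sent with the query, so a short follow-up like “how much?” still carries the earlier mention of thing B.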

For example, one prompt says “every couple questions ask the user if they’d like to create a project”. If the user responds yes or asks to create a project, the response includes “action: create_project”, which the parser picks up. That triggers a “Do when true” action that switches out the “active character” while still sharing all the context.
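A rough Python sketch of that action-to-character switch, assuming the model replies with a JSON object that may carry an `action` key. The character names and the `ACTION_TO_CHARACTER` mapping are hypothetical; only `create_project` comes from the setup described above.

```python
import json

# Hypothetical mapping from a parsed action to the character it activates.
ACTION_TO_CHARACTER = {
    "create_project": "builder",
    "gather_data": "retriever",
}


def next_character(raw_reply: str, active: str) -> str:
    """Return the character that should handle the next turn."""
    reply = json.loads(raw_reply)
    if not isinstance(reply, dict):
        # Valid JSON but not an object: keep the current character.
        return active
    # Unknown or missing actions leave the active character unchanged.
    return ACTION_TO_CHARACTER.get(reply.get("action"), active)
```

Because only the active character changes, the shared conversation context can be passed to whichever prompt is active next.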

One character reads, embeds, and gathers Pinecone data, then another character takes over to extract and build with that data. I built a side panel, similar to Claude’s “canvas”, that reveals a template, and the RAG also stores all the canvas data as you build it out, sending it in a loop. So when I tell it “let’s change the price value to the average of these 6 values”, it will either A) automatically update the JSON, and the parser will update the canvas, or B) if it doesn’t have context on what I am asking, revert back to the Pinecone character, gather data, update the context, and then switch back to the build character with the new info.
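The A/B branch could look something like this in Python. The `needs_context` and `canvas_updates` keys are assumed names for illustration, as are the character names; the real JSON shape isn’t shown in this thread.

```python
from typing import Any, Dict, Tuple


def apply_reply(
    reply: Dict[str, Any], canvas: Dict[str, Any], active: str
) -> Tuple[Dict[str, Any], str]:
    """Return the (possibly updated) canvas and the character for the next turn."""
    if reply.get("needs_context"):
        # Case B: the build character lacks context, so hand back to retrieval.
        return canvas, "retriever"
    # Case A: merge the model's field updates into a copy of the canvas.
    updated = {**canvas, **reply.get("canvas_updates", {})}
    return updated, active
```

Returning a new dictionary instead of mutating `canvas` keeps the previous canvas state around in case an update has to be discarded.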

I love this solution. Question: how are you validating the JSON before it gets parsed? Do you have some sort of schema enforcement, or can you trust the model to output clean JSON?


In my prompt I basically explain, “hey, I’m gonna give you a bunch of guidelines and rules, and each has a score from 1-10 on how crucial it is you don’t screw up”, with one of them being a 10/10: respond with valid JSON only. It wasn’t planned as a test, but I’ve run it about 300 times in the last 24 hours (who doesn’t love refreshing pages and typing new prompts!) and I haven’t had an issue. As a precaution, I attached an unexpected-error trigger to my parser, so if the response is invalid, it breaks the parser, the parser alerts the error trigger, and the trigger stops the workflow and restarts it.
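In code terms, the parse-then-restart safety net is roughly the following Python sketch. `call_model` is a hypothetical stand-in for whatever sends the prompt and returns the raw reply text, and the `max_attempts` cap is an assumption so a bad prompt can’t loop forever.

```python
import json
from typing import Callable, Optional


def parse_with_retry(
    call_model: Callable[[], str], max_attempts: int = 3
) -> Optional[dict]:
    """Call the model until it returns a JSON object, up to max_attempts times."""
    for _ in range(max_attempts):
        raw = call_model()
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            # Invalid JSON: treat it like the error trigger and try again.
            continue
        if isinstance(parsed, dict):
            return parsed
    # Every attempt failed; the caller decides what to show the user.
    return None
```

This only checks that the reply is a JSON object. It does not enforce a schema, so required keys would still need their own check.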

On the front end, the user doesn’t see this; the little spinning loader just does a couple more laps. I also used an HTML element to deactivate the default progress bar on this page. I’m also pretty bummed that text streaming will not work for this type of call, so I’ve been putting extra effort into this page’s UI/UX.