I need some help with a server-side plugin. I am trying to create a web-scraping plugin that loads a URL and grabs the HTML of certain elements. (Note: it also does other things, but I have simplified it until I resolve this issue.)

The code I first developed works for URLs that don’t have a lot of content. However, when I try a URL with a lot of content, the plugin times out. Based on what I have read, I need to use context.v3.async, but I can’t figure out how to get it to work.

I have searched for an answer, but nothing seems to be helping.

Code:

```javascript
function(properties, context) {
  const axios = require('axios');
  const cheerio = require('cheerio');
  const htmlToText = require('html-to-text');

  const url = properties.url;
  const limit = properties.limit;

  async function getHTML(url) {
    try {
      const response = await axios.get(url);
      return response.data;
    } catch (error) {
      console.error('Error retrieving HTML:', error);
      return null;
    }
  }

  return getHTML(url)
    .then(html => {
      const $ = cheerio.load(html); // Load HTML using cheerio
      const title = $('title').text(); // Retrieve the page title

      // Strip content after the '|' character in the page title
      const titleTxt = title.split('|')[0].trim();

      const subtitles = [];
      $('.subtitle').each((index, element) => {
        subtitles.push($(element).text().trim());
      });
      const descriptionTxt = subtitles.join(' '); // Combine all subtitles into a single string

      const articleContent = [];
      $('div.article, div.chapter, div.part').each((index, element) => {
        articleContent.push($(element).html());
      });
      const htmlContent = articleContent.join('\n'); // Combine all article content into a single string, separated by newlines

      return {
        "html_title": titleTxt,
        "html_description": descriptionTxt,
        "html_text": htmlContent,
      };
    })
    .catch(error => {
      console.error('Error:', error);
    });
}
```

Honestly, if this is a custom private plugin, for this use case I would offload the scraping to a serverless environment like AWS Lambda, Azure Functions, or Google Cloud Functions. You can then create a plugin action that simply calls the serverless function, passing the request URL plus a Bubble endpoint to deliver the extracted data to. Otherwise you are going to get burned by workload units.
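A minimal sketch of the plugin-action half of that setup, assuming plugin API v4 on Node 18 or later (built-in `fetch`) and a serverless function that accepts a JSON body. `FUNCTION_URL`, the `callback_url` property, the `buildScrapeRequest` helper, `offloadAction` and the `accepted` return value are hypothetical names chosen for illustration; the extracted data itself would arrive later at the Bubble endpoint rather than through this action's return value.

```javascript
// Hypothetical endpoint of the deployed serverless function (placeholder).
const FUNCTION_URL = 'https://REGION-PROJECT.cloudfunctions.net/extractData';

// Hypothetical helper: builds the fetch() options for a JSON POST that tells
// the function which page to scrape and where to deliver the results.
function buildScrapeRequest(pageUrl, callbackUrl) {
  return {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ url: pageUrl, callback_url: callbackUrl }),
  };
}

// v4-style action (assumed shape): it waits only for the function's HTTP
// response. properties.callback_url is an assumed field holding the URL of
// a Bubble backend workflow that will receive the scraped data.
const offloadAction = async function (properties, context) {
  const response = await fetch(
    FUNCTION_URL,
    buildScrapeRequest(properties.url, properties.callback_url)
  );
  return { accepted: response.ok };
};
```

One caveat worth checking in the provider's documentation: Google Cloud Functions does not guarantee that work continues after an HTTP function has sent its response, so the function may need to finish scraping and call the callback URL before it responds.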

Thanks for the reply. I came across that route while researching as well, but I'm trying to avoid it for the time being because it would require a bit more learning on my part to set up. Also, I am working on a legacy app and just testing out an idea, so workload units (WU) aren’t a big deal at this point.

But if the idea works, I would need to spend some time cleaning it up going forward.

Your main plugin function returns a Promise. Quick fix: return await getHTML(....

Are you using v4? If so, your `function(properties, context)` should instead be `async function(properties, context)`, and why aren’t you using the now built-in `fetch`?

Yes, I am using v4. I am not sure what you mean by “why aren’t you using the now built-in fetch?”.

What do I do with the current return that looks like this?

```javascript
return {
  "html_title": titleTxt,
  "html_description": descriptionTxt,
  "html_text": htmlContent,
};
```

You’re using the axios package when `fetch` is already built in.
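A sketch of both suggestions applied to the original action, assuming plugin API v4 on Node 18 or later (global `fetch`) and `cheerio` listed as a plugin dependency. The function is bound to a hypothetical `run` name only so it can be exercised outside Bubble; in the editor you would paste just the `async function (properties, context) { ... }` part. The return object is the same as in the original post. The URL check and the `response.ok` check are additions: unlike axios, `fetch` does not reject on HTTP error statuses such as 404, so the status has to be checked explicitly. Errors are thrown rather than logged and swallowed, so Bubble sees the failure instead of an empty result.

```javascript
// Sketch of the original action rewritten for Bubble's plugin API v4.
// Assumptions: Node 18+ (global fetch) and cheerio added as a dependency.
const run = async function (properties, context) {
  const url = properties.url;

  // Fail fast with a readable message instead of a vague fetch error.
  try {
    new URL(url);
  } catch {
    throw new Error(`Invalid URL: ${url}`);
  }

  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Request failed with HTTP ${response.status}`);
  }
  const html = await response.text();

  const cheerio = require('cheerio');
  const $ = cheerio.load(html);

  // Page title with everything after the first '|' removed.
  const titleTxt = $('title').text().split('|')[0].trim();
  // All subtitles joined into one string.
  const descriptionTxt = $('.subtitle')
    .map((index, element) => $(element).text().trim())
    .get()
    .join(' ');
  // Inner HTML of every article/chapter/part block, one per line.
  const htmlContent = $('div.article, div.chapter, div.part')
    .map((index, element) => $(element).html())
    .get()
    .join('\n');

  // Every value is computed before this return, so Bubble receives
  // finished strings rather than a pending Promise.
  return {
    html_title: titleTxt,
    html_description: descriptionTxt,
    html_text: htmlContent,
  };
};
```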

My bad, I misread your post. There is nothing wrong with your code if it works for some URLs, and the returned promise is awaited by the Bubble code that calls your function.

I suspect that if it works for some URLs and not for others, it’s because cheerio or the loops you are running take too long for the server action time limit.
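One way to check that guess before moving the code elsewhere is to time each step and compare the numbers wherever console output ends up (for a plugin, presumably the app's server logs). `timeStep` is a hypothetical helper, and the commented usage lines assume an async version of the action plus the `getHTML`, `url`, `cheerio` and `articleContent` names from the original post; `performance.now()` is a global in Node 16 and later.

```javascript
// Hypothetical helper: runs one step (sync or async), logs its duration in
// milliseconds and returns the step's result (or rethrows its error).
async function timeStep(label, fn) {
  const start = performance.now();
  try {
    return await fn();
  } finally {
    console.log(`${label}: ${(performance.now() - start).toFixed(0)} ms`);
  }
}

// Usage inside an async version of the plugin action (assumed names):
//   const html = await timeStep('download', () => getHTML(url));
//   const $ = await timeStep('cheerio.load', () => cheerio.load(html));
//   await timeStep('article loop', () => {
//     $('div.article, div.chapter, div.part').each((i, el) => {
//       articleContent.push($(el).html());
//     });
//   });
```

If the download dominates, the page size or the remote server is the bottleneck; if `cheerio.load` or the loop dominates, the parsing is, and offloading it is the more likely fix.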

Aaron’s suggestion seems like the right path.

@aaronsheldon

Following this example, I took your advice and set up a Google Cloud Function last night. Now I am figuring out how to pass variables to the Google Cloud Function.

I have converted the code from Bubble to a Google Cloud Function and can trigger it with a gateway API call. Now I can’t figure out how to pass the body parameters to the Google Cloud Function. Everything I have tried has failed.

Does anyone have an example to follow, or can someone help me get the variables into the Google Cloud Function?
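A minimal sketch of reading the URL from the request instead of hardcoding it, assuming the caller (for example Bubble's API Connector) sends a POST with a JSON body such as `{"url": "https://..."}` and a `Content-Type: application/json` header. Google Cloud Functions passes Node.js HTTP functions an Express-style request and parses JSON bodies into `req.body`, so the hardcoded `const url = ...` line becomes a lookup, with `req.query.url` as a fallback for GET calls. `readTargetUrl` and `makeExtractData` are hypothetical names; the factory takes the existing `getHTML` and `processData` helpers as arguments only so this sketch stands on its own.

```javascript
// Reads the target URL from a JSON body ({"url": "..."}) or, as a fallback,
// from the query string (?url=...). Returns null if missing or not http(s).
function readTargetUrl(req) {
  const body = req.body && typeof req.body === 'object' ? req.body : {};
  const candidate = body.url ?? (req.query ? req.query.url : undefined);
  if (typeof candidate !== 'string') return null;
  try {
    const parsed = new URL(candidate);
    return parsed.protocol === 'http:' || parsed.protocol === 'https:'
      ? parsed.href
      : null;
  } catch {
    return null;
  }
}

// Builds the HTTP handler around the existing getHTML/processData helpers.
function makeExtractData(getHTML, processData) {
  return async (req, res) => {
    const url = readTargetUrl(req);
    if (!url) {
      res.status(400).json({ error: 'Send a JSON body like {"url": "https://..."}.' });
      return;
    }
    try {
      const html = await getHTML(url);
      res.status(200).json(await processData(html));
    } catch (error) {
      console.error('Error:', error);
      res.status(500).json({ error: 'An error occurred while processing the data.' });
    }
  };
}
```

In the deployed file the export would then be `exports.extractData = makeExtractData(getHTML, processData);`. On the Bubble side, the API Connector call would be a POST whose JSON body contains the `url` field as a dynamic parameter.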

Hardcoded Variables in Google Cloud Function:

```javascript
const axios = require('axios');
const cheerio = require('cheerio');
const htmlToText = require('html-to-text');

exports.extractData = async (req, res) => {
  const url = 'https://www.irs.gov/instructions/i1040gi';

  try {
    const html = await getHTML(url);
    const data = await processData(html);
    res.status(200).json(data);
  } catch (error) {
    console.error('Error:', error);
    res.status(500).json({ error: 'An error occurred while processing the data.' });
  }
};

async function getHTML(url) {
  try {
    const response = await axios.get(url);
    return response.data;
  } catch (error) {
    console.error('Error retrieving HTML:', error);
    throw error;
  }
}

async function processData(html) {
  const $ = cheerio.load(html); // Load HTML using cheerio
  const title = $('title').text(); // Retrieve the page title
  const titleTxt = title.split('|')[0].trim();

  const subtitles = [];
  $('.subtitle').each((index, element) => {
    subtitles.push($(element).text().trim());
  });
  const descriptionTxt = subtitles.join(' ');

  const articleContent = [];
  $('div.article, div.chapter, div.part').each((index, element) => {
    articleContent.push($(element).html());
  });
  const htmlContent = articleContent.join('\n');

  return {
    "html_title": titleTxt,
    "html_description": descriptionTxt,
    "html_text": htmlContent,
  };
}
```

Initialization of the API when hardcoding variables:

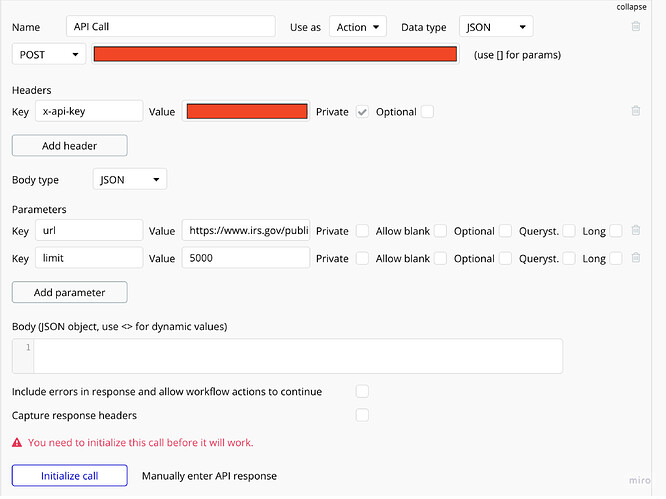

API Setup: