Is there currently a problem with the streaming/typing out the text?

Mine doesn’t seem to be working; it just delivers a single block of text.

@Timbo - I don’t think there’s currently an issue.

When you say a “block text”, do you mean you get the full response all at once? Are you using the “Fallback (non-streaming) action”?

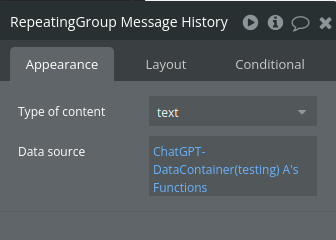

My bad. I had turned off streaming in the repeating group. It works fine now. Apologies.

Alright, thank you for the info!

Will try your suggested solution, thanks!

@Timbo - no worries! Glad you figured it out!

Hi all,

Just released an update - v5.16.3

This fixes a bug some users were seeing: if a “Send Message w/ Server” event was started while another stream was already running, the second stream would overlap with the first and produce what looked like nonsense.

Now there is a “Force Start” option, which gives you some more control.

Note that you also still have the option of manually using the “Stop Stream” action before you run “Send Message w/ Server”, and using a conditional like “Result of Step 1’s Stop Stream Succeeded? is yes”, which was the previous recommendation. This should work exactly the same as before.
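For anyone curious why overlapping streams look like nonsense, here is a minimal sketch in TypeScript. It is a hypothetical model, not the plugin's code or API: `StreamManager`, `send`, `stop`, `fakeStream`, and `runDemo` are illustrative names, and the "model output" is simulated with timers. It assumes both streams write into the same display, which is what makes the text interleave, and shows that stopping the running stream before starting a new one (the idea behind “Stop Stream” and “Force Start”) leaves only the second response.

```typescript
// Hypothetical sketch only: NOT the plugin's implementation or API.
// Models two streams writing into one display, with and without stopping first.

interface StreamHandle {
  cancelled: boolean;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Simulated model output: emits one word per tick until the stream is cancelled.
async function fakeStream(
  words: string[],
  delayMs: number,
  handle: StreamHandle,
  onChunk: (word: string) => void,
): Promise<void> {
  for (const word of words) {
    await sleep(delayMs);
    if (handle.cancelled) return; // stopped: drop any remaining chunks
    onChunk(word);
  }
}

class StreamManager {
  private current: StreamHandle | null = null;
  display: string[] = [];

  // Cancel the running stream, if any; returns true if one was stopped.
  stop(): boolean {
    if (this.current === null) return false;
    this.current.cancelled = true;
    this.current = null;
    return true;
  }

  // Start a new stream. With stopFirst = false, an older stream keeps
  // appending to the shared display, so the two outputs interleave.
  async send(words: string[], stopFirst: boolean, delayMs = 20): Promise<boolean> {
    if (stopFirst) this.stop();
    const handle: StreamHandle = { cancelled: false };
    this.current = handle;
    this.display = [];
    // The callback reads this.display at call time, so an old stream
    // that was never stopped writes into the new display.
    await fakeStream(words, delayMs, handle, (w) => this.display.push(w));
    if (this.current === handle) this.current = null;
    return !handle.cancelled; // true if this stream ran to completion
  }
}

// Start one stream, then a second one while the first is still running.
async function runDemo(stopFirst: boolean): Promise<string> {
  const manager = new StreamManager();
  const first = manager.send(["one", "two", "three"], stopFirst);
  await sleep(30); // first stream has emitted "one" and is mid-flight
  const second = manager.send(["alpha", "beta", "gamma"], stopFirst);
  await Promise.all([first, second]);
  return manager.display.join(" ");
}
```

In this sketch, `runDemo(false)` resolves to interleaved text mixing words from both streams (the exact order depends on timer scheduling), while `runDemo(true)` resolves to `"alpha beta gamma"`.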

Hopefully this will help folks who have complex workflows, multiple generations triggered in sequence, or generations started from other non-standard triggers.

Let me know if you run into any issues!

(P.S. The blue workflow box in the screenshot below is not the workflow that the Step 2 shown belongs to, so don’t pay attention to the conditional on that workflow.)

Hey again all,

Just another quick note - I just finished updating the self-hosting package, so if you’re interested in setting up your own server, DM me!

Hello, I am using this plugin just for a single prompt (with no history) and for its great response streaming feature. However, I would like users to be able to like / save a response once streaming has finished, which would create a database record with the full message returned from ChatGPT. I cannot find a way to save the returned message once it is complete. Could you help?

Lovely, many thanks!

Hey @Guillaume.b,

The best way to do this is to use the “When Message Generation is Complete” event trigger, and then save the Data Container’s “Message History” and “Display Messages” to a database entry (both are lists of texts).

You can see a walkthrough of this in the tutorial video: ChatGPT with Real-Time Streaming - Bubble Plugin Setup Guide - YouTube

The conversational-style UI section starts around minute 30. Note that this tutorial is a little outdated now, but the message history parts are still the same.

Or you can see it setup in the demo app, here: chatgpt-demo | Bubble Editor

Hope this helps!

You’re a star!

Anyone know what this means and why?

The chat works initially but then throws this during longer chats.

Just going to put this here, as I think a lot of you are using Pinecone in combination with the plugin.

Is the plugin down right now? I get Err_connect_reset messages.

It worked wonderfully until now, and nothing has changed on my end.

TypeError: Failed to fetch

@benjamin.doerries & @Timbo - I’ll look into these errors. I haven’t seen either of them before.

Hey @fabian.s.menzel - glad you like it!

You can count tokens by using the “When Message Generation Complete” event, which will fire when the stream is complete, and then getting your token counts with the states “Data Container’s Last Request Token Usage - Total” (or Input / Output).

I’ll add a note about this to the documentation tips page for others.

Hi @launchable - In your most recent getting started video (great video), you mention that you are working on a potential solution where a company could host its own version of the middleware server. I would like to put my name in the hat for setting this up in Azure or AWS.

This is important to our B2B project as we would need to have as many of the critical components under our management as possible.

Hey @tpolland - That’s available now. I’ll follow up in DMs.

Hi all. Partial / timeline update: for those of you wanting to use Function Calling, the functionality is nearly ready and should be released later this week.

At about 16:56:45 BST (or just after), the request started fine, then paused for a few seconds, then continued, with all of the text presumably sent during the pause missing. This was on 5.15.3. Hope you can find it in the logs haha