Hello, I am running into a server capacity issue and hope someone can give me some advice on how to solve it.

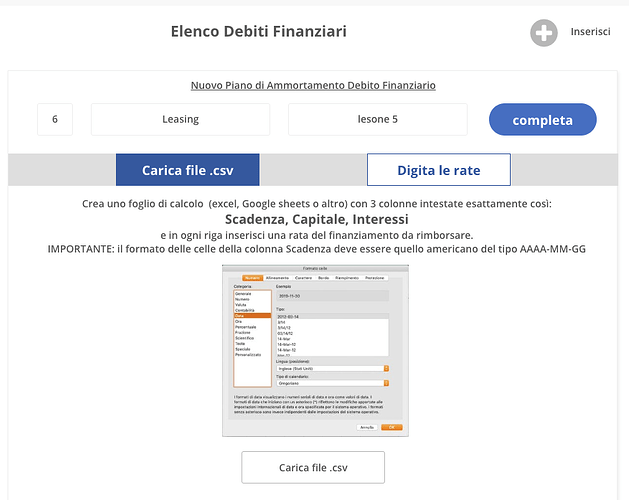

This is the use case: users enter a new bank loan (saved in a thing called FinDebt Head, which holds only a few fields such as the bank name and the loan type) and then upload a .csv file with 3 columns: due date, capital and interest. I am testing the app with a 35-row file (I know there is a limit of 100 rows, which is fine for me).

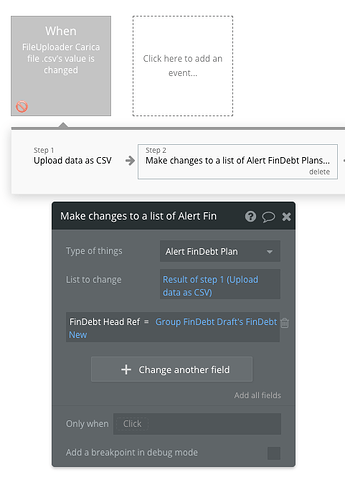

First scenario: when the file is uploaded, a workflow runs the upload .csv action, followed immediately by a “make changes to a list of things” action that gives every record of the newly uploaded loan repayment plan the proper reference to the bank loan (FinDebt Head).

This setup works, but Bubble takes far too long to write 35 records and update the cross-reference. Because of the poor user experience, I have tried to find a workaround.

NOTE A) Yesterday I spent hours trying to parse the .csv and import the records as JSON through the API Connector, but this is too complicated for me and I did not succeed (if anyone can help, I would really appreciate it).
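For reference, the parsing step I was after would look roughly like this minimal Python sketch (not something I have working in Bubble). It assumes the file has a header row with the hypothetical column names `due_date`, `capital` and `interests`, and that the unique id of the FinDebt Head is already known, so the loan reference is set on each row when it is created instead of in a second "make changes" step. Writing one JSON object per line is an assumption about what a bulk-create endpoint might accept, not a confirmed Bubble format.

```python
import csv
import io
import json


def csv_to_records(csv_text: str, findebt_head_id: str) -> list[dict]:
    """Turn the 3-column repayment plan into one dict per row.

    Assumption: header row named due_date, capital, interests (hypothetical
    names). The parent loan id is attached to every row up front, so no
    separate update pass is needed to set the reference.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    records = []
    for row in reader:
        records.append({
            "due_date": row["due_date"].strip(),
            "capital": float(row["capital"]),
            "interests": float(row["interests"]),
            "findebt_head": findebt_head_id,  # hypothetical reference field
        })
    return records


def to_bulk_body(records: list[dict]) -> str:
    # One JSON object per line (newline-delimited). Assumption: the target
    # endpoint accepts this layout; check the API docs before relying on it.
    return "\n".join(json.dumps(r) for r in records)
```

For example, calling `csv_to_records(text, "head-123")` on a 35-row file would return 35 dicts, each carrying `"findebt_head": "head-123"`, ready to be serialized in a single request rather than written and then updated row by row.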

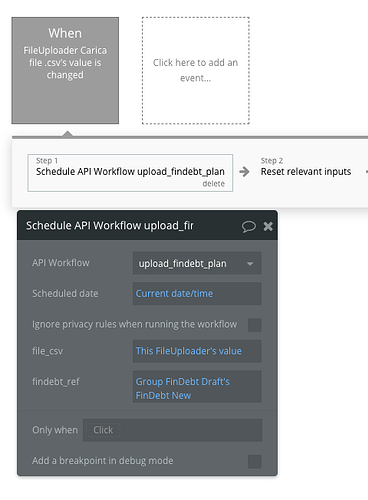

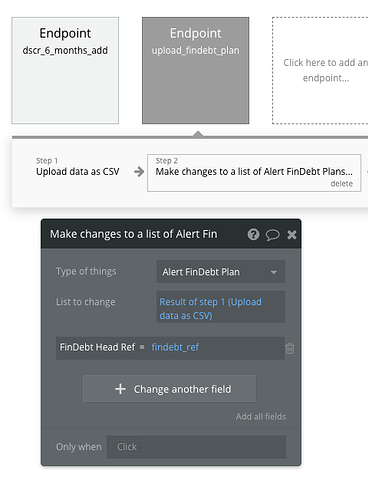

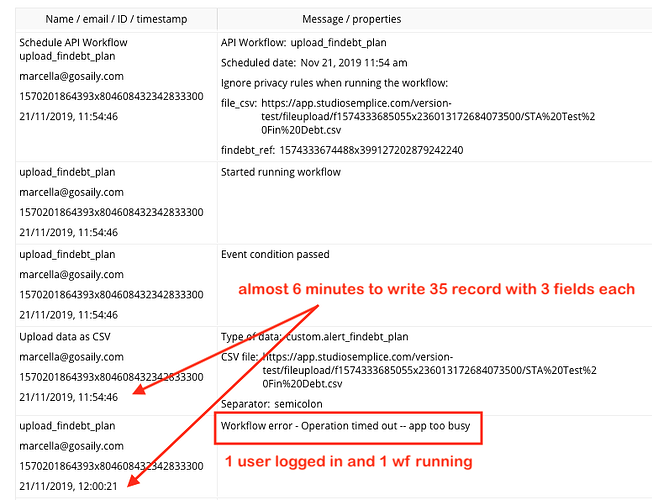

Second scenario: instead of blocking the user while Bubble finishes the workflow, I thought I could use a scheduled API workflow. So I set up an endpoint with the same actions used in the normal workflow. On the upload event the endpoint is triggered: the .csv is uploaded, the loan plan is created and the bank loan reference is updated.

Here is the problem: with the API workflow, the app runs out of capacity after uploading the .csv, and the “make changes to a list” action never gets to run. To confirm this was the only problem, I activated a temporary capacity boost and no error occurred (it is still slow, but at least it completes).

I would like to share this situation and ask for comments and advice on the following:

-) Why do the same actions not use up all the capacity in a normal workflow, while they do in an API workflow? Is there a better way to manage this kind of action?

-) If there is no way to quickly upload a few dozen records, is an API workflow the right workaround, or is there another method?

-) Most of all, if a single workflow uses up all the server capacity, what will happen in production when 10 or even 100 users each run one workflow at the same time? This is not just a matter of upgrading to the Professional Plan; I seriously wonder how much all this additional server capacity is going to cost.

Thank you in advance for any reply.

Stefano