Over the last 24 hours, my client and I have had quite a surprise from Bubble. It was bound to happen eventually, and workload unit (WU) overages finally hit hard, but the logic behind them makes little sense.

In short, we have a list of 10,500 “businesses” and a backend workflow set up to do the following:

- Use the Google Places API to retrieve the place ID based on the city, state, and business name.
- Use the Google Places photos API to retrieve the photos from each business's profile, using the “photo reference” ID returned by the previous step.
- Retrieve the phone number from the Google Places Details API.
- Trigger a DataForSEO API task to scrape ALL of the Google reviews for each business, based on the place ID retrieved in step 1.
- Schedule retrieval of the scrape results for one hour later, then create a new review in the database for each scraped review and update the average review rating on the business (1,500 reviews per business max).

We expected these steps to be fairly WU-heavy, of course, but the actual WU usage made little to no sense in my opinion. In theory, you'd expect the Google Places calls and the creation of reviews to be by far the heaviest parts.

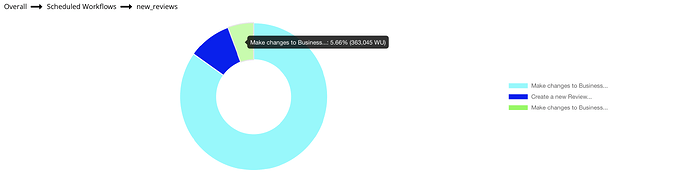

What ended up being the heaviest part? The “Make changes to Business” action that sets the rating to Result of step 2 (the business's list of reviews):average.

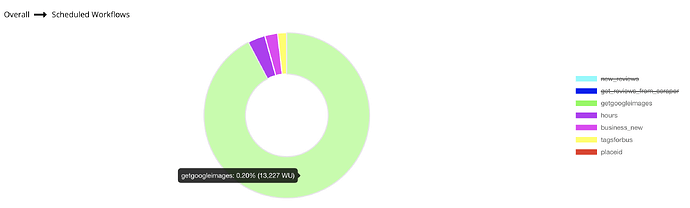

Here are some statistics from our panel:

- Total WU used: 6.679M
- Retrieve place IDs: 423 WU (it updated 10,500 records and spent only 423 WU? OK.)
- Retrieving place IDs and photos: 13k WU
- new_review workflow: 6.4M WU

To clarify my involvement in the project: I was brought in to handle the place ID and technical SEO side. The review scrape setup and retrieval already existed, and I was instructed to use it.

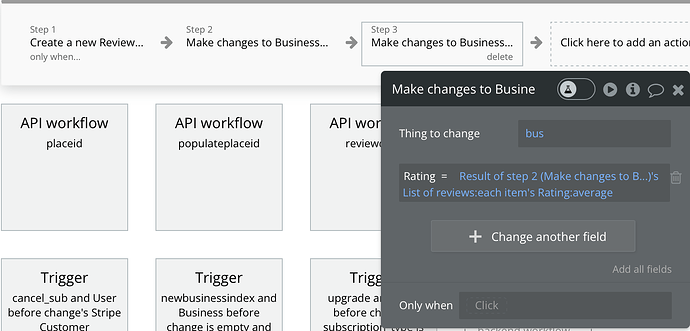

Inside this new_review workflow there are three actions:

1: Create a new review.

2: Increment the business's total review count by 1 and add the review from step 1 to the nested “review list”.

3: Update the business's “rating” field (:average of all reviews in the list). Ideally this would have happened once at the very end rather than after every review, but again, the review part of this was not my setup.
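For reference, step 3 does not need to re-read the whole review list at all: an average can be updated from the previous average and the new count. Below is a minimal Python sketch of that running-average update, assuming the business stores its current rating and review count. `Business`, `rating`, `review_count`, and `add_review` are hypothetical names for illustration, not Bubble fields or actions.

```python
from dataclasses import dataclass


@dataclass
class Business:
    # Hypothetical stand-ins for the business's "rating" and total review count fields.
    rating: float = 0.0
    review_count: int = 0


def add_review(biz: Business, stars: float) -> None:
    """Fold one new review into the stored average without reading the review list."""
    biz.review_count += 1
    # Incremental mean: new_avg = old_avg + (x - old_avg) / n
    biz.rating += (stars - biz.rating) / biz.review_count


if __name__ == "__main__":
    biz = Business()
    for stars in [5, 4, 3, 5]:
        add_review(biz, stars)
    print(biz.review_count, round(biz.rating, 2))
```

The other option, computing :average a single time after all of a business's reviews are created, reads the list once and avoids any accumulated rounding error; the incremental form is useful when the rating has to stay current while reviews are still arriving.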

In the end, something seems off here, for a few reasons.

Should a Bubble :average operator really account for 84% of the 6.68M total WU when the other flows do far more work? I guess it COULD be this costly, since it has to pull the full list of reviews every time because they're using nested data. However, that doesn't explain why the place ID flow for 10,500 businesses, which included 3x Google API calls, 3x data changes, and a recursive photo-creation flow, accounts for only 423 WU.
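To put a rough number on the “it has to pull the full list” theory: if :average reads every item in the list and runs after each new review, a business that ends up with n reviews has list items read 1 + 2 + … + n = n(n+1)/2 times in total, versus n times for a single pass at the end. The sketch below only counts item reads; treating WU cost as proportional to items read is an assumption, not Bubble's published WU formula.

```python
def reads_if_recomputed_each_time(n: int) -> int:
    """Items read if the full list is averaged after each of n inserts: 1 + 2 + ... + n."""
    return n * (n + 1) // 2


def reads_if_computed_once(n: int) -> int:
    """Items read if the average is computed a single time after all n inserts."""
    return n


if __name__ == "__main__":
    for n in (10, 100, 1500):
        print(n, reads_if_recomputed_each_time(n), reads_if_computed_once(n))
```

At the 1,500-review cap this works out to 1,125,750 item reads for one business versus 1,500, roughly 750 times more. If cost does scale with items read, that growth alone could explain why the rating update dominates the new_review workflow.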

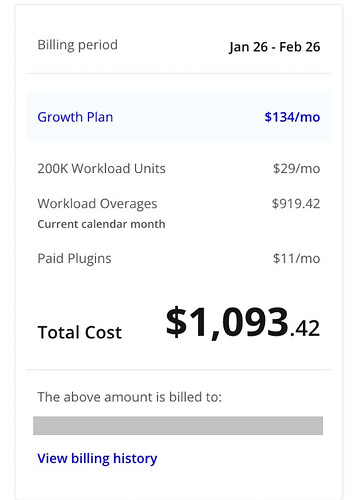

Additionally, the overage charge last night between 8:30 PM and 9 PM, when all flows ended, was $485.

It wasn't until 3:05 PM today that we got a workload unit spike email, and when we checked, there was a new overage of $1,093. However, if you look at the chart, there has been zero usage since roughly 9:30 PM last night, and according to Bubble, WU and overage reporting is all “real time”.

Bubble real time reporting thread

Quick edit: although it doesn't make a massive difference, only 9,200 of the 10,500 businesses actually reached the review flow, because the other 1,300 errored out due to a DataForSEO limit.
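Normalizing the panel figures by the number of businesses each flow actually processed (9,200 for the new_review workflow, 10,500 for the place ID flow) makes the gap explicit:

$$
\frac{6.4\times 10^{6}\ \text{WU}}{9{,}200\ \text{businesses}} \approx 696\ \text{WU per business},
\qquad
\frac{423\ \text{WU}}{10{,}500\ \text{businesses}} \approx 0.04\ \text{WU per business}
$$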